# promise-crawler

Promise support for node-crawler (Web Crawler/Spider for NodeJS + server-side jQuery)

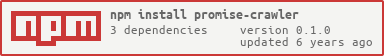

[npm](https://nodei.co/npm/promise-crawler/)

[Build Status](https://travis-ci.org/AppliedSoul/promise-crawler) [Coverage Status](https://coveralls.io/github/AppliedSoul/promise-crawler?branch=master) [Greenkeeper](https://greenkeeper.io/)

A Node.js library for crawling websites with [node-crawler](https://github.com/bda-research/node-crawler), exposing its API through Bluebird promises instead of callbacks.
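node-crawler itself reports results through callbacks. The sketch below shows one general way such a callback can be turned into a promise; it is an illustration under stated assumptions, not promise-crawler's actual source. `FakeCallbackCrawler` and `requestAsPromise` are hypothetical names, the fake only mimics node-crawler's `(error, res, done)` callback without touching the network, and the sketch uses native promises rather than Bluebird.

```javascript
// Hypothetical stand-in for a callback-based crawler such as node-crawler.
// It "fetches" a page asynchronously and calls back with (error, res, done).
class FakeCallbackCrawler {
    constructor(pages) {
        this.pages = pages; // assumed test data: map of url -> HTML string
    }

    queue(options) {
        setImmediate(() => {
            const body = this.pages[options.url];
            const done = () => {}; // node-crawler uses done() to free the slot
            if (body === undefined) {
                options.callback(new Error(`No page for ${options.url}`), null, done);
            } else {
                options.callback(null, { body }, done);
            }
        });
    }
}

// Wrap one queued request in a Promise: always call done() so the queue
// can continue, then reject with the error or resolve with the response.
function requestAsPromise(crawler, options) {
    return new Promise((resolve, reject) => {
        crawler.queue({
            ...options,
            callback: (error, res, done) => {
                done();
                if (error) {
                    reject(error);
                } else {
                    resolve(res);
                }
            }
        });
    });
}
```

The caller then chains `.then()` and `.catch()` instead of nesting logic inside the callback, which is the style the example below relies on.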

#### Install using npm:

```sh
npm i promise-crawler --save
```

Example:

```javascript
const PromiseCrawler = require('promise-crawler');

// Initialize with node-crawler options
const crawler = new PromiseCrawler({
    maxConnections: 10,
    retries: 3
});

// Perform setup, then use the crawler
crawler.setup()
    .then(() => {
        // Make a request with node-crawler queue options;
        // returning the promise lets errors reach the catch below
        return crawler.request({
            url: 'http://example.com'
        })
            .then((res) => {
                // Server-side response parsing using cheerio
                const $ = res.$;
                console.log($('title').text());
            })
            // Destroy the instance once the request settles, on success or failure
            .finally(() => process.nextTick(() => crawler.destroy()));
    })
    .catch((err) => {
        console.error(err);
    });
```
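To fetch several pages, the same `request()` call can be fanned out with `Promise.all`. A minimal sketch, assuming `crawler` has already resolved `setup()` and that each response exposes `res.$` as in the example above; `crawlTitles` is a hypothetical helper, not part of promise-crawler.

```javascript
// Hypothetical helper: fetch the <title> of several pages with an already
// set-up crawler. Each request() returns a promise, so Promise.all waits for
// every page and returns the results in the same order as `urls`.
function crawlTitles(crawler, urls) {
    return Promise.all(urls.map((url) =>
        crawler.request({ url }).then((res) => ({
            url,
            title: res.$('title').text()
        }))
    ));
}
```

With a real instance this would be called as `crawler.setup().then(() => crawlTitles(crawler, urls))`. Since requests presumably go through node-crawler's queue, `maxConnections` should still cap how many run at once. `Promise.all` rejects as soon as one page fails; `Promise.allSettled` is an alternative when partial results are acceptable.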